Traditional computers have changed our lives over the past several decades, and in their early days their computing power was considered revolutionary. However, with the growing volume of data produced by scientific research and the rise of the Internet, the demand for computing power has been growing exponentially. In research, even supercomputers fall short for certain simulations, which can take days to run, and only then if suitable conditions have been found. If the number of transistors on a microprocessor keeps doubling roughly every two years (a pace often quoted as every 18 months), as Moore’s Law observes, then by 2020 or 2030 the circuits on a microprocessor would be measured on an atomic scale. The logical next step would be to build quantum computers, which harness the power of atoms and molecules to perform memory and processing tasks. Quantum computers have the potential to perform certain calculations significantly faster than any silicon-based computer.
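As a rough illustration of the doubling arithmetic, the sketch below projects the growth factor in transistor count for the two doubling periods commonly quoted. It assumes a perfectly steady doubling rate, which real chips have never followed exactly, and the function name `moores_law_factor` is hypothetical.

```python
def moores_law_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth factor in transistor count after `years`, assuming a fixed doubling period."""
    return 2.0 ** (years / doubling_period_years)


# Compare the two commonly quoted doubling periods over a 30-year span.
for period in (1.5, 2.0):
    factor = moores_law_factor(30, period)
    print(f"doubling every {period} years -> x{factor:,.0f} after 30 years")
```

The gap between the two assumptions is large: over 30 years an 18-month period gives 2^20 growth, while a two-year period gives 2^15.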

Classical computers encode information in bits. Each bit takes the value 1 or 0, and these 1s and 0s act as on/off switches that ultimately drive computer functions. Quantum computers, on the other hand, are based on quantum bits (qubits), which operate according to two key principles of quantum physics: superposition and entanglement. Superposition means that a qubit can exist in a combination of 1 and 0 at the same time; when it is measured, it yields a 1 or a 0 with probabilities set by that combination. Entanglement means that qubits in a superposition can be correlated with each other, so that the outcome for one (whether it is a 1 or a 0) depends on the state of another. Using these two principles, qubits can act as more sophisticated switches, enabling quantum computers to tackle certain difficult problems that are intractable for today’s computers.
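The toy state-vector sketch below, in plain Python, illustrates both ideas: a Hadamard gate puts one qubit into an equal superposition, and a Hadamard followed by a CNOT entangles two qubits into a Bell state whose measured bits always agree. The helper names are hypothetical, and this is a classical simulation for intuition only, not how a quantum computer is actually programmed.

```python
import math
import random

# A quantum state is a list of complex amplitudes, one per basis state.
# Measuring it gives each outcome with probability |amplitude|^2.


def probabilities(amplitudes):
    """Measurement probability of each basis state."""
    return [abs(a) ** 2 for a in amplitudes]


def hadamard(qubit):
    """Hadamard gate on one qubit: maps |0> to an equal superposition of |0> and |1>."""
    a, b = qubit
    s = 1 / math.sqrt(2)
    return [s * (a + b), s * (a - b)]


def bell_state():
    """Entangle two qubits: Hadamard on the first, then CNOT (first controls second).
    Amplitudes are ordered |00>, |01>, |10>, |11>."""
    q0 = hadamard([1, 0])  # first qubit in superposition
    q1 = [1, 0]            # second qubit starts in |0>
    # Tensor product of the two independent qubits.
    joint = [q0[0] * q1[0], q0[0] * q1[1], q0[1] * q1[0], q0[1] * q1[1]]
    # CNOT: when the first qubit is 1, flip the second (swap |10> and |11>).
    joint[2], joint[3] = joint[3], joint[2]
    return joint


def measure(amplitudes, labels, rng):
    """Sample one measurement outcome according to the Born rule."""
    return rng.choices(labels, weights=probabilities(amplitudes))[0]


plus = hadamard([1, 0])
bell = bell_state()
rng = random.Random(42)
samples = [measure(bell, ["00", "01", "10", "11"], rng) for _ in range(1000)]

print(probabilities(plus))   # both outcomes equally likely
print(sorted(set(samples)))  # only the correlated outcomes appear
```

Each qubit of the Bell state on its own looks like a fair coin, yet the two results always match; that correlation is what the entanglement description above refers to.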

Quantum computers are powerful machines that take a new approach to processing information. Built on the principles of quantum mechanics, they exploit complex and fascinating laws of nature that are always at work but usually remain hidden from view. By harnessing this behavior, quantum computers can run new types of algorithms that exploit superposition and entanglement to process information. They may one day lead to revolutionary breakthroughs in materials and drug discovery, the optimization of complex human-made systems, and artificial intelligence.

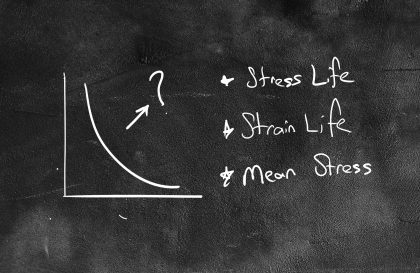

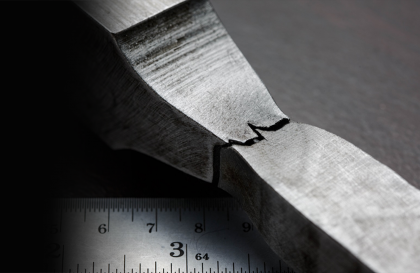

Both the benefits and the challenges of quantum computing are inherent in the physics that permits it. To perform a computation with many qubits, they must all be sustained in interdependent superpositions of states: a “quantum-coherent” state, in which the qubits are said to be entangled. That way, a tweak to one qubit may influence all the others. As a result, computational operations on qubits count for more than they do on classical bits. For a classical device, computational resources grow in simple proportion to the number of bits, but adding an extra qubit potentially doubles the resources of a quantum computer, because describing n entangled qubits takes 2^n numbers. This is why the difference between a 5-qubit and a 50-qubit machine is so significant.

To carry out a quantum computation, you need to keep all your qubits coherent, and this is very hard. Interactions between a system of quantum-coherent entities and its surrounding environment create channels through which the coherence rapidly “leaks out”, a process called decoherence. Researchers seeking to build quantum computers must stave off decoherence, which they can currently do only for a fraction of a second. That challenge grows as the number of qubits, and hence the potential to interact with the environment, increases. This is largely why, even though quantum computing was first proposed by Richard Feynman in 1982 and the theory was worked out in the early 1990s, it has taken until now to make devices that can actually perform a meaningful computation.
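A minimal sketch of the scaling argument, assuming a classical simulator that stores one 16-byte complex amplitude per basis state. It also includes a deliberately simple decoherence model in which each qubit loses coherence independently as exp(-t/T2), so an entangled register of n qubits decays n times faster; the T2 value is a hypothetical placeholder, not a figure for any real device.

```python
import math

BYTES_PER_AMPLITUDE = 16  # one complex number stored as two 64-bit floats


def state_vector_size(n_qubits: int) -> int:
    """Amplitudes a classical simulator needs to describe n entangled qubits."""
    return 2 ** n_qubits


def surviving_coherence(n_qubits: int, t_us: float, t2_us: float) -> float:
    """Toy model: each qubit dephases independently as exp(-t / T2), so the
    coherence of the whole entangled register decays as exp(-n * t / T2)."""
    return math.exp(-n_qubits * t_us / t2_us)


HYPOTHETICAL_T2_US = 100.0  # illustrative coherence time, not a measured value

for n in (5, 50):
    amps = state_vector_size(n)
    memory = amps * BYTES_PER_AMPLITUDE
    coherence = surviving_coherence(n, t_us=10.0, t2_us=HYPOTHETICAL_T2_US)
    print(f"{n} qubits: {amps:,} amplitudes, {memory:,} bytes, "
          f"coherence left after 10 us: {coherence:.3f}")
```

Under these assumptions, 5 qubits need 32 amplitudes (512 bytes), while 50 qubits need about 1.1 × 10^15 amplitudes, roughly 18 petabytes; over the same interval the toy model also leaves the 50-qubit register far less coherent.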

There’s a second fundamental reason why quantum computing is so difficult: like just about every other process in nature, it is noisy. Random fluctuations, from heat in the qubits, say, or from fundamentally quantum-mechanical processes, will occasionally flip or randomize the state of a qubit, potentially derailing a calculation. This is a hazard in classical computing too, but there it is not hard to deal with: you keep two or more backup copies of each bit, so that a randomly flipped bit stands out as the odd one out.
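The classical trick described above is a repetition code. The sketch below stores three copies of a bit (the original plus two backups), flips each copy independently with probability p, and decodes by majority vote; the independent-flip noise model and the function names are assumptions for illustration. With three copies, the vote fails only if two or more copies flip, so the error rate falls from p to 3p²(1 − p) + p³.

```python
import random


def encode(bit: int, copies: int = 3) -> list[int]:
    """Repetition code: store several identical copies of one bit."""
    return [bit] * copies


def add_noise(bits: list[int], p: float, rng: random.Random) -> list[int]:
    """Flip each stored copy independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]


def decode(bits: list[int]) -> int:
    """Majority vote: the odd one out is treated as the error."""
    return 1 if sum(bits) > len(bits) // 2 else 0


def logical_error_rate_3(p: float) -> float:
    """Exact failure probability of a 3-copy majority vote: two or three flips."""
    return 3 * p**2 * (1 - p) + p**3


rng = random.Random(0)
p = 0.01
trials = 100_000
failures = sum(decode(add_noise(encode(0), p, rng)) != 0 for _ in range(trials))
print(f"simulated: {failures / trials:.5f}, exact: {logical_error_rate_3(p):.5f}")
```

For p = 0.01 the exact failure rate is 0.000298, so the simulated rate should land close to that, about 30 times lower than the raw error rate.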

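Researchers working on quantum computers have devised strategies for dealing with the noise. Because an unknown quantum state cannot simply be copied (the no-cloning theorem), these strategies cannot rely on plain backup copies; instead, they spread each logical qubit across many physical qubits. This imposes a huge computational overhead: much of your computing power goes to correcting errors rather than to running your algorithms.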
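To give a sense of scale for that overhead, the sketch below counts physical qubits under one widely studied scheme, the rotated surface code, which encodes a logical qubit of code distance d in d² data qubits plus d² − 1 measurement qubits. The choice of code, the distances and the 100-logical-qubit target are illustrative assumptions; real designs also need extra qubits for routing and other operations that this count ignores.

```python
def rotated_surface_code_qubits(distance: int) -> int:
    """Physical qubits for one logical qubit in a rotated surface code of odd
    distance d: d*d data qubits plus d*d - 1 measurement qubits."""
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    return 2 * distance * distance - 1


def physical_qubits(logical_qubits: int, distance: int) -> int:
    """Total physical qubits for a register of independent code patches."""
    return logical_qubits * rotated_surface_code_qubits(distance)


# Larger distances tolerate more errors but cost quadratically more qubits.
for d in (3, 11, 25):
    per_logical = rotated_surface_code_qubits(d)
    print(f"d={d}: {per_logical} physical qubits per logical qubit, "
          f"{physical_qubits(100, d):,} for 100 logical qubits")
```

Even the smallest distance needs 17 physical qubits per logical qubit under this scheme, which is why a machine with a few dozen physical qubits cannot yet run fully error-corrected algorithms.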

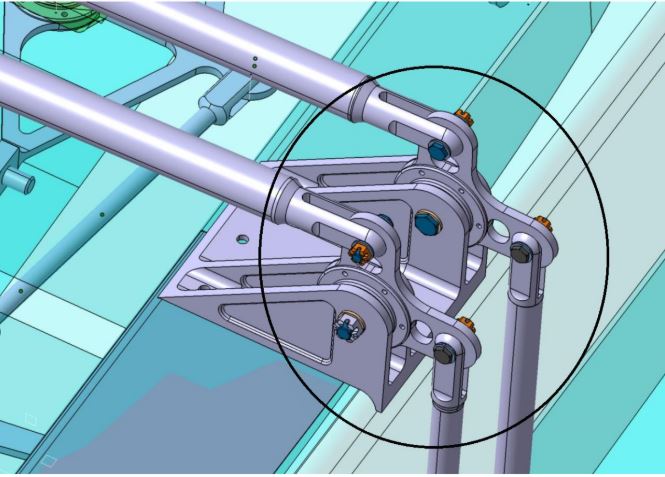

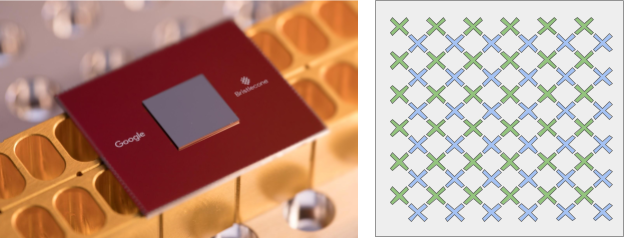

Despite these challenges, which can make the life of a quantum research scientist a living nightmare, Google has announced that its Quantum AI Lab is taking steps toward quantum supremacy (the point at which a quantum computer performs a task beyond the practical reach of classical supercomputers) with the development of “Bristlecone”, its latest quantum processor. Bristlecone is intended as a testbed for research into system error rates and the scalability of Google’s qubit technology, as well as applications such as machine learning. Google’s previous nine-qubit processor demonstrated promisingly low error rates: 1 per cent for readout, 0.1 per cent for single-qubit gates and 0.6 per cent for two-qubit gates. The new 72-qubit device uses a similar design to its predecessor, scaled up significantly. To protect the fragile quantum systems from outside disturbances and keep error rates low, today’s quantum computers must be run at close to absolute zero. Under these conditions, Google hopes to achieve error rates on the larger chip as low as those of its nine-qubit processor, which is necessary if a quantum computer is to stand a chance of achieving quantum supremacy.
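The sketch below shows why those per-operation error rates matter as devices scale up. It multiplies the chance that each operation succeeds, using the nine-qubit error rates quoted above, which assumes errors are independent and that any single error ruins the run; the gate counts for both circuits are hypothetical.

```python
# Error rates quoted for Google's nine-qubit processor.
READOUT_ERROR = 0.01
SINGLE_QUBIT_GATE_ERROR = 0.001
TWO_QUBIT_GATE_ERROR = 0.006


def circuit_success_probability(n_single: int, n_two: int, n_readout: int) -> float:
    """Rough chance that no error occurs anywhere in a circuit, assuming every
    operation fails independently at its quoted rate."""
    return ((1 - SINGLE_QUBIT_GATE_ERROR) ** n_single
            * (1 - TWO_QUBIT_GATE_ERROR) ** n_two
            * (1 - READOUT_ERROR) ** n_readout)


# Hypothetical circuits: a small 9-qubit one and an eight-times-larger 72-qubit one.
small = circuit_success_probability(n_single=90, n_two=40, n_readout=9)
large = circuit_success_probability(n_single=720, n_two=320, n_readout=72)
print(f"9-qubit circuit:  {small:.3f}")
print(f"72-qubit circuit: {large:.3f}")
```

With eight times as many operations, the estimated success probability drops by more than an order of magnitude, which is why keeping error rates low on the 72-qubit chip matters so much.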
