We humans are very good at collaboration. For instance, when two people work together to carry a heavy object like a table or a sofa, they tend to instinctively coordinate their motions, constantly recalibrating to make sure their hands are at the same height as the other person’s. Our natural ability to make these types of adjustments allows us to collaborate on tasks big and small.

Imagine you’re lifting a couch with a friend. You’re at opposite ends and need to agree on when to heft it up. You could go for it on the count of three, or maybe, if you’re mentally in sync, with a nod of the head.

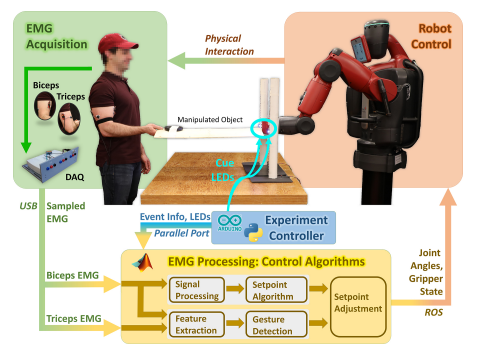

Now let’s say you’re doing the same with a robot: what’s the best way to tell it what to do, and when? Roboticists at MIT have created a mechanical system that can help humans lift objects, and it works by directly reading the electric signals produced by a person’s biceps.

The approach is noteworthy because it’s not the way most people interact with technology. We’re used to talking to assistants like Alexa or Siri, tapping on smartphones, or using a keyboard, mouse, or trackpad. Some devices watch for gestures: the Google Nest Hub Max, a smart home tablet with a camera, can notice a raised hand signaling “stop” when a user wants to do something like pause a video. Autonomous vehicles, meanwhile, perceive their surroundings through instruments like lasers, cameras, and radar units.

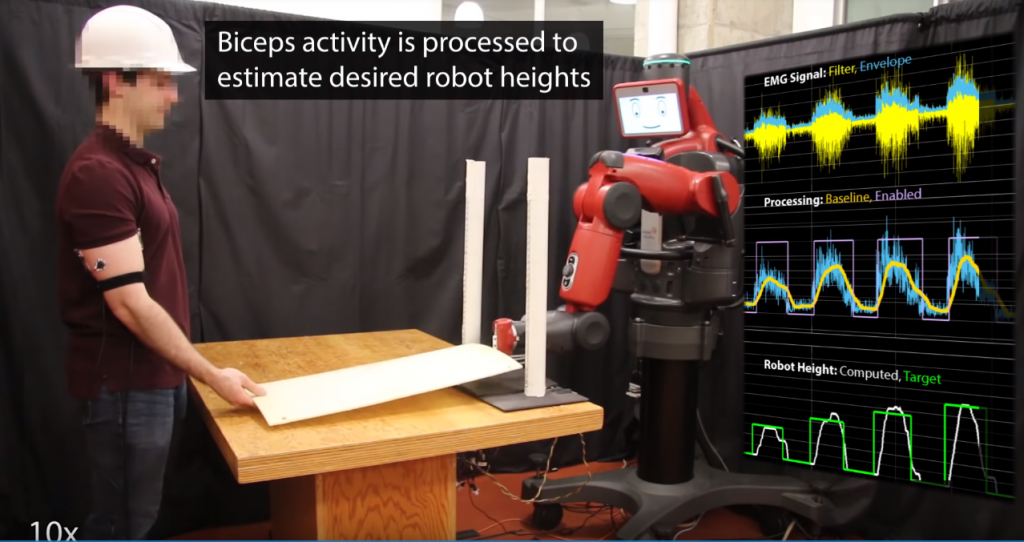

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) recently showed that smoother human-robot collaboration is possible with a new system they developed, in which a machine helps a person lift objects by monitoring that person’s muscle activity.

The biceps-sensing robot works thanks to electrodes that are literally stuck onto a person’s upper arm and connected by wires to the robot. “Overall the system aims to make it easier for people and robots to work together as a team on physical tasks,” says Joseph DelPreto, a doctoral candidate at MIT who studies human-robot interaction and the first author of a paper describing the system. Working together well usually requires good communication, and in this case, that communication comes straight from your muscles. “As you’re lifting something with the robot, the robot can look at your muscle activity to get a sense of how you’re moving, and then it can try to help you.”

The system also interprets subtler motions, something it can do thanks to artificial intelligence. To nudge the robotic arm up or down in a more nuanced way, a person wearing the electrodes on their upper arm can move their wrist slightly up twice, or down once, and the bot does their bidding. To accomplish this, DelPreto used a neural network, an AI system that learns from data. The network interprets the electromyography (EMG) signals coming from the person’s biceps and triceps, analyzing them some 80 times per second, and then tells the robot arm what to do.
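
For readers who think in code, here is a rough sketch of what “analyzing the signals some 80 times per second” could look like. It is not DelPreto’s implementation. The sensor rate, the window length, the thresholds, the command labels, and the function names are all assumptions made for illustration, and a simple rule stands in for the trained neural network. The only number taken from the description above is the rate of roughly 80 decisions per second.

```python
from collections import deque
from typing import Callable, Iterable, Iterator, List, Tuple

# One EMG reading: (biceps, triceps) amplitude in arbitrary units.
Sample = Tuple[float, float]

SAMPLE_RATE_HZ = 1000    # assumed sensor rate (not stated in the article)
DECISIONS_PER_SEC = 80   # from the article: roughly 80 classifications per second
WINDOW_SAMPLES = 200     # assumed amount of recent signal shown to the classifier


def placeholder_classifier(window: List[Sample]) -> str:
    """Hypothetical stand-in for the trained neural network.

    Compares mean rectified biceps and triceps activity and returns
    one of the illustrative labels "up", "down", or "hold".
    """
    biceps = sum(abs(b) for b, _ in window) / len(window)
    triceps = sum(abs(t) for _, t in window) / len(window)
    if biceps > 0.5 and biceps > 2 * triceps:
        return "up"
    if triceps > 0.5 and triceps > 2 * biceps:
        return "down"
    return "hold"


def stream_commands(
    samples: Iterable[Sample],
    classify: Callable[[List[Sample]], str] = placeholder_classifier,
) -> Iterator[str]:
    """Yield one command per decision tick once the window has filled."""
    window: deque = deque(maxlen=WINDOW_SAMPLES)
    step = SAMPLE_RATE_HZ / DECISIONS_PER_SEC  # samples between decisions
    next_decision = step
    for count, sample in enumerate(samples, start=1):
        window.append(sample)
        if count >= next_decision:
            next_decision += step
            # Skip decisions until a full window of history is available.
            if len(window) == WINDOW_SAMPLES:
                yield classify(list(window))


if __name__ == "__main__":
    # One second of synthetic data with the biceps clearly active.
    one_second = [(1.0, 0.1)] * SAMPLE_RATE_HZ
    commands = list(stream_commands(one_second))
    print(len(commands), set(commands))
```

In a real system, the `classify` argument would be the trained network, and each command it produces would be passed on to the robot arm’s controller.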